By Yvonne Hofstetter, CEO

Photo: Universitätsbibliothek Heidelberg

Innovation is both a basic human need and a basic human capability. It follows directly from the human ability to question given circumstances, even facts, in order to grow beyond supposed limitations and to invent things of all kinds.

But innovation is not only technical. Techno-social and social innovations also count as innovations if they are new and prevail across society as a whole, i.e. if they are widely adopted as a cultural achievement. This shows that innovation is more than a research result that academia examines and that often remains there without ever being carried into the market. Bringing an innovation to market is a risky challenge, one that startups in particular take on all over the world.

Different classes of innovation

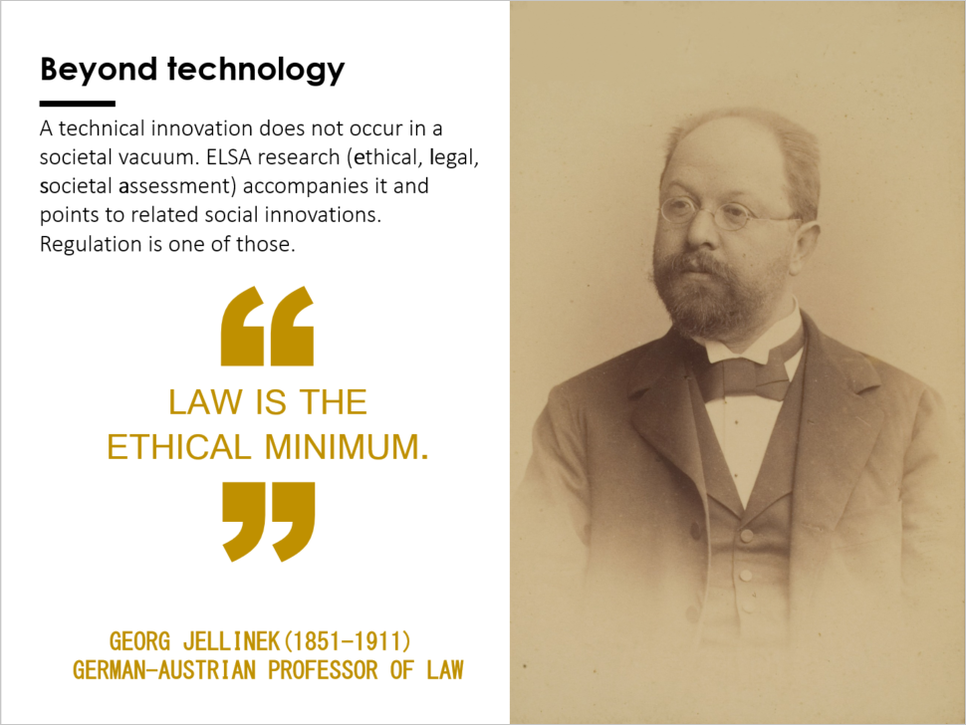

Social innovations often precede technical innovations, accompany them, or become necessary after a technical innovation has taken place. The consequences of technology often require society to transform itself in order to cope with them. This also applies to Artificial Intelligence (AI). Consequently, initiatives around Ethical AI have been sprouting up around the world for about four years.

Anyone who scans the roughly 100 documents that governments, NGOs and private organizations have published on the subject of Ethical AI will see thematic convergence on a meta-level, but also plenty of confusion. Calls for further technical innovations, such as Explainable AI, are just as loud as calls for political innovations, such as new laws on liability for AI operations. Scientists no longer remain objective; they have become political across the board and aim to shape society, with all the problems that a political stance in science brings with it, such as political bias in research. Ethics committees and expert groups put pressure on decision-makers to implement certain norms, but they are not democratically staffed, nor do they carry the authority to act in a morally paternalistic way. And how the requirements for Ethical AI can be reconciled with an agile management process and value-based technical design is far from being anchored in practice.

Putting ethical, legal, and societally valuable AI in context

Until 2024, Prof. Yvonne Hofstetter, CEO of 21strategies and author, will work on examining, categorizing and framing societal innovations for AI in the context of air defense. This work will do more than put the classes and categories of innovation in context. It will document the technical-material innovation of a very concrete tech stack; it aims to propose an organizational innovation that embeds this technical-material innovation into a value-based design approach within an agile process model; and it will consider possible political innovations around that same tech stack.

If, for example, the exploratory ability of Reinforcement Learning is inherent in the software, will policy makers generally forbid this form of Machine Learning by law? Ultimately, exploration means that a machine can behave in completely unexpected ways and thus does not function “reliably”. AI reliability, however, is a key requirement of AI ethics councils and working groups.

The results of this work will be documented and published, deliberately without the now widespread political claim of science to shape or transform society. One demand remains, though, and it is the one 21strategies makes of itself: striving for the highest quality of AI. Anyone who regards AI not merely as a material-technical innovation, but also thinks in other categories of innovation, will build better AI.

Find out how 21strategies deploys artificial intelligence and algorithmic decision support systems by visiting our website.